At xTerra Robotics, we're dedicated to pushing the boundaries of legged mobility, developing solutions for a diverse range of applications. Our recent technical deep dive at ROSCon India 2024, presented by our co-founder Amritanshu Manu, offered a fascinating look into the intricate software architecture that brings our robots to life. This post expands on those insights, detailing the core components, strategic design choices, and advanced algorithms that enable efficient, real-time locomotion even on low-power compute platforms.

The Sense-Plan-Act Loop: The Heart of Legged Robot Intelligence

Like most autonomous robots, our legged platforms operate on a Sense-Plan-Act loop that must run fast enough to support dynamic movement. Our robots carry a depth camera, an IMU (inertial measurement unit), and joint encoders on each of their 12 actuated joints. The IMU and joint encoders provide proprioceptive data about the robot's own state, while the depth camera provides exteroceptive data about its surroundings. This continuous feedback loop is essential for adapting to real-world conditions.

Core Architectural Components: A Model-Based Approach

Our locomotion software is built upon a model-based architecture, utilizing a detailed understanding of the robot's dynamics and kinematics. This forms the foundation for three interconnected core components:

1. Locomotion Software (The PEC Cycle)

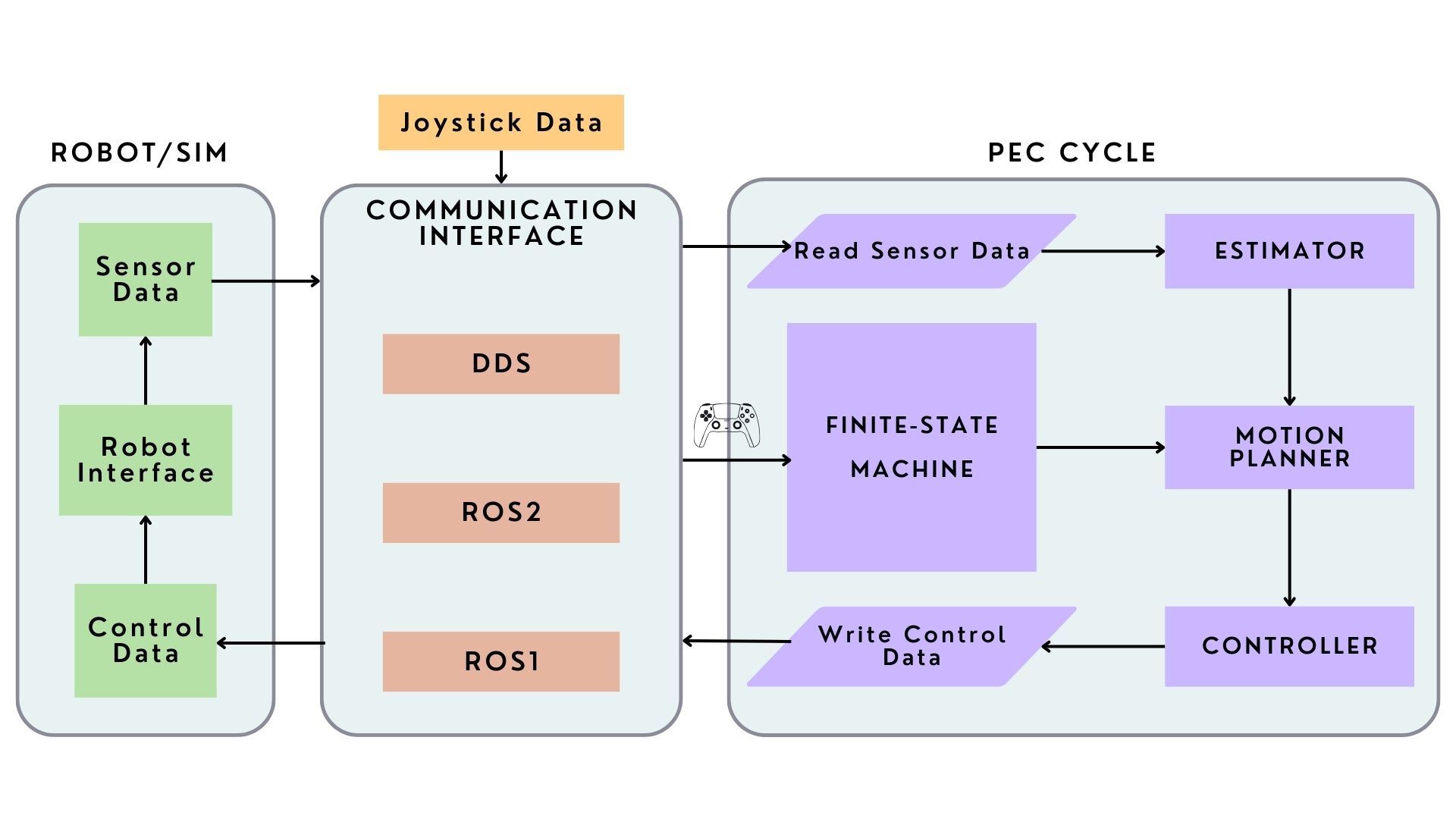

- This is the brain of the robot's movement, operating as a Planner-Estimator-Controller (PEC) cycle.

- The Motion Planner takes user commands (e.g., desired velocity) and calculates the robot's next footstep and joint movements, creating a reference state.

- The Estimator continuously determines the robot's current state (velocity, orientation, limb position) using data from proprioceptive sensors like the IMU and joint encoders.

- The Controller then computes the precise torques required at each joint to achieve the planned movement.
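As an illustration of the Controller's job, here is a minimal Python sketch of a joint-space PD-plus-feedforward torque law for the 12 joints. The post does not specify the control law we use, so the data structures (`JointReference`, `JointEstimate`), the function `pd_torques`, and the gains are hypothetical.

```python
from dataclasses import dataclass
from typing import List

NUM_JOINTS = 12  # the robots described in this post have 12 actuated joints


@dataclass
class JointReference:
    """Planner output for one control tick (hypothetical structure)."""
    q: List[float]       # desired joint positions [rad]
    qd: List[float]      # desired joint velocities [rad/s]
    tau_ff: List[float]  # feedforward torques [N*m], e.g. from a dynamics model


@dataclass
class JointEstimate:
    """Estimator output: current joint positions and velocities."""
    q: List[float]
    qd: List[float]


def pd_torques(ref: JointReference, est: JointEstimate,
               kp: float, kd: float, tau_max: float) -> List[float]:
    """Joint-space PD plus feedforward, saturated to +/- tau_max per joint."""
    taus = []
    for i in range(NUM_JOINTS):
        tau = (ref.tau_ff[i]
               + kp * (ref.q[i] - est.q[i])      # position error term
               + kd * (ref.qd[i] - est.qd[i]))   # velocity error term
        taus.append(max(-tau_max, min(tau_max, tau)))  # respect actuator limits
    return taus
```

With kp = 40 and kd = 1, a 0.1 rad position error, a 0.2 rad/s velocity overshoot, and 1 N·m of feedforward give 1 + 4 - 0.2 = 4.8 N·m per joint, well inside a 20 N·m limit.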

2. Perception and Navigation Stack

- This critical stack utilizes sensor data to build an understanding of the environment and plan paths.

- We leverage NVIDIA's Isaac ROS libraries for Visual SLAM (Simultaneous Localization and Mapping), using depth camera and IMU data to compute odometry and poses.

- The nvblox toolbox takes these poses, along with depth and color images, to perform scene reconstruction, generating signed distance fields of the environment. It also handles both static and dynamic scene reconstruction and maintains a free-space layer for dynamic obstacle avoidance.

- Finally, the Nav2 stack processes the reconstructed scene data and, given specified waypoints, computes velocity commands that are then passed to the Locomotion software.
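To show what a signed distance field stores, here is a brute-force 2D sketch over a small occupancy grid. nvblox builds its distance fields in 3D and updates them incrementally on the GPU; this toy version, including the function name `signed_distance_field` and the 0/1 grid encoding, is an illustrative assumption and not nvblox's API.

```python
import math
from typing import List

Grid = List[List[int]]  # 1 = occupied, 0 = free


def signed_distance_field(grid: Grid, resolution: float = 1.0) -> List[List[float]]:
    """Brute-force 2D Euclidean SDF: positive in free space, negative inside obstacles.

    Each cell gets the distance (scaled by `resolution`, e.g. metres per cell)
    from its centre to the nearest cell centre of the opposite type. Cells with
    no opposite-type cell get +/-inf. O(N^2) in the number of cells, so this is
    only suitable for small illustrative grids.
    """
    rows, cols = len(grid), len(grid[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    sdf = [[math.inf] * cols for _ in range(rows)]
    for r, c in cells:
        occupied = grid[r][c] == 1
        best = math.inf
        for rr, cc in cells:
            if (grid[rr][cc] == 1) != occupied:  # opposite type: obstacle vs free
                best = min(best, math.hypot(r - rr, c - cc))
        dist = best * resolution
        sdf[r][c] = -dist if occupied else dist
    return sdf
```

A downstream planner can then treat any cell whose value is below the robot's footprint radius as a collision, which is the kind of query a costmap built from these fields answers.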

3. Interfaces for Seamless Operation

- Communication Interface: Designed for flexibility, this module supports ROS 1, ROS 2, and DDS, enabling seamless data flow between different software blocks and hardware.

- Hardware Interface: This bridges our Locomotion software with the low-level motor interfaces, translating commands into physical actuation.

- Simulation Interface: For development and testing, custom Gazebo plugins interact with the robot's URDF (Unified Robot Description Format) to simulate base, joint, and contact states, including custom contact models and motor control loops.
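Custom contact models in simulation are often penalty-based: the ground pushes back like a spring-damper once a foot penetrates it, and friction is capped by a Coulomb cone. The sketch below assumes exactly that model for a point foot on flat ground; the post does not describe our actual contact model, so `ContactParams`, `foot_contact_force`, and all parameter values are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ContactParams:
    stiffness: float           # normal spring constant k [N/m]
    damping: float             # normal damping d [N*s/m]
    tangential_damping: float  # viscous tangential coefficient [N*s/m]
    mu: float                  # Coulomb friction coefficient


def foot_contact_force(height: float, vx: float, vz: float,
                       p: ContactParams) -> Tuple[float, float]:
    """Penalty (spring-damper) contact for a point foot on flat ground at z = 0.

    Returns (fx, fz). There is no force while the foot is above the ground.
    The normal force is clamped at zero so the ground never pulls the foot
    down, and the tangential force is a viscous term saturated to the
    friction cone |fx| <= mu * fz.
    """
    penetration = -height
    if penetration <= 0.0:
        return 0.0, 0.0
    fz = max(0.0, p.stiffness * penetration - p.damping * vz)
    limit = p.mu * fz
    fx = max(-limit, min(limit, -p.tangential_damping * vx))
    return fx, fz
```

A simulator plugin would evaluate this for each foot on every physics step; stiffer springs look more like rigid ground but force a smaller integration step.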

Strategic Design Choices & Optimization for Real-Time Performance

The challenge for modern legged robots lies in executing sophisticated locomotion algorithms on power-constrained hardware while maintaining real-time performance. Our architectural decisions and algorithmic optimizations are specifically tailored to address this:

1. Synchronous PEC Cycle

Initially, our planner, estimator, and controller ran as asynchronous ROS 1 nodes, leading to synchronization issues and performance degradation on hardware. We made a pivotal design change to make the PEC cycle synchronous, ensuring these three computations happen sequentially. This also significantly improved code modularity, allowing for easy switching between different implementations of each block.
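A minimal sketch of a synchronous cycle, assuming a single thread that runs the three blocks back to back at a fixed rate such as 500 Hz. The class and method names (`PECCycle`, `estimate`, `plan`, `control`) are hypothetical, as is running the estimator first so the planner and controller see the same fresh state; Python is used only for readability.

```python
import time
from typing import Callable, Protocol, Sequence


class Estimator(Protocol):
    def estimate(self, sensors: dict) -> dict: ...


class Planner(Protocol):
    def plan(self, command: dict, state: dict) -> dict: ...


class Controller(Protocol):
    def control(self, reference: dict, state: dict) -> Sequence[float]: ...


class PECCycle:
    """Runs the three blocks sequentially in one thread, so they share one state snapshot."""

    def __init__(self, planner: Planner, estimator: Estimator, controller: Controller) -> None:
        # Any object with the matching method can be plugged in, so each block
        # can be swapped for another implementation without touching the loop.
        self.planner = planner
        self.estimator = estimator
        self.controller = controller

    def step(self, command: dict, sensors: dict) -> Sequence[float]:
        state = self.estimator.estimate(sensors)          # current base/joint state
        reference = self.planner.plan(command, state)     # desired state for this tick
        return self.controller.control(reference, state)  # joint torques

    def run(self, rate_hz: float,
            read_command: Callable[[], dict],
            read_sensors: Callable[[], dict],
            write_torques: Callable[[Sequence[float]], None],
            cycles: int) -> int:
        """Fixed-rate loop; returns how many cycles overran their time budget."""
        period = 1.0 / rate_hz  # e.g. 2 ms at 500 Hz
        next_tick = time.monotonic()
        overruns = 0
        for _ in range(cycles):
            write_torques(self.step(read_command(), read_sensors()))
            next_tick += period
            slack = next_tick - time.monotonic()
            if slack > 0:
                time.sleep(slack)
            else:
                overruns += 1
                next_tick = time.monotonic()  # resynchronise rather than bursting to catch up
        return overruns
```

Because nothing runs concurrently, there is no message queue between the blocks that can drift out of sync, and the overrun count gives a direct measure of whether the hardware keeps up with the chosen rate.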

2. Hybrid Middleware Approach

- For the core Locomotion software, we made a conscious decision to use bare DDS (Data Distribution Service) instead of ROS 2. This choice provided direct access to Quality of Service (QoS) features and avoided the "overhead that ROS 2 brings in," while still maintaining interoperability with ROS 2 (as ROS 2 itself uses DDS). This streamlined approach allows our PEC cycle to run robustly at 500 Hz on a Raspberry Pi 4B with moderate CPU usage (65-68%).

- For the Perception and Navigation stack, we fully leverage the ROS 2 ecosystem due to its well-developed tools and ease of integration.

3. Modular Code and Finite State Machine (FSM)

Our revised architecture isolates communication code into a separate, switchable module. We also implemented an FSM to easily switch between different robot behaviors, such as moving, standing, sleeping, or performing specific tasks.
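A behavior FSM can be as small as an enum plus a table of allowed transitions. The sketch below assumes three of the behaviors mentioned above and a hypothetical rule that the robot must stand before walking or lying down; task-specific behaviors would simply be additional states. `Mode`, `ALLOWED`, and `BehaviorFSM` are illustrative names.

```python
from enum import Enum, auto


class Mode(Enum):
    SLEEPING = auto()  # lying down, low-power pose
    STANDING = auto()  # holding a static stance
    MOVING = auto()    # PEC cycle tracking velocity commands


# Hypothetical transition table: stand up before walking, stop before lying down.
ALLOWED = {
    Mode.SLEEPING: {Mode.STANDING},
    Mode.STANDING: {Mode.SLEEPING, Mode.MOVING},
    Mode.MOVING: {Mode.STANDING},
}


class BehaviorFSM:
    def __init__(self) -> None:
        self.mode = Mode.SLEEPING

    def request(self, target: Mode) -> bool:
        """Switch to `target` if the transition is allowed; return whether the robot is now in it."""
        if target == self.mode:
            return True
        if target in ALLOWED[self.mode]:
            self.mode = target
            return True
        return False  # rejected: stay in the current mode
```

Rejecting unsafe requests in one place keeps that logic out of the planner and controller.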

Advanced Algorithmic Approaches for Efficient Locomotion

To achieve computational efficiency without compromising stability or performance, we employ several advanced algorithms:

- Model Predictive Control (MPC) Optimization: Traditional MPC implementations are computationally intensive. Our optimizations include reduced-order models to simplify dynamics, adaptive horizon scaling based on terrain, and hierarchical decomposition of control problems for parallel solving.

- Real-Time Gait Pattern Generation: We use a hybrid approach combining pre-computed gait libraries for quick retrieval, online interpolation to blend gaits based on conditions, and reactive modifications for obstacle avoidance and stability.

- Hardware-Aware Algorithm Design:

  - Leveraging Edge Computing: We use specialized processing units, such as GPUs for matrix operations, dedicated AI accelerators for learned components, and FPGA implementations of critical control loops, to significantly accelerate our algorithms.

  - Memory Optimization: Techniques such as in-place operations, circular buffers, and quantization minimize memory usage, which is crucial for real-time performance on resource-constrained systems.

- Whole-Body Impulse Control: This approach formulates the control problem in task space, allowing for efficient computation of joint torques in high-degree-of-freedom systems while meeting task objectives.

- Terrain-Aware Footstep Planning: Our method combines A* search with heuristics for rapid exploration of footstep sequences, local optimization based on sensor data, and risk assessment for step feasibility over complex terrain.
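One widely used reduced-order model for legged robots is the linear inverted pendulum (LIPM): the body is a point mass at constant height z0 over a stance point p, so x'' = (g/z0)(x - p). The post does not name the reduced-order model we use, so this sketch assumes the LIPM and shows only the prediction an MPC would roll out over its horizon, not the optimization itself. `lipm_rollout` and its parameters are hypothetical.

```python
import math
from typing import List, Tuple

G = 9.81  # gravitational acceleration [m/s^2]


def lipm_rollout(x0: float, v0: float, p: float, z0: float,
                 dt: float, horizon: int) -> List[Tuple[float, float]]:
    """Predict CoM position/velocity along one axis with the linear inverted pendulum.

    Dynamics: x'' = (g / z0) * (x - p), with the stance point p held fixed over
    the horizon. Uses the exact closed-form solution, so `dt` only sets the
    sample spacing. Returns `horizon` (x, v) samples at t = dt, 2*dt, ...
    """
    omega = math.sqrt(G / z0)
    out = []
    for k in range(1, horizon + 1):
        t = k * dt
        c, s = math.cosh(omega * t), math.sinh(omega * t)
        x = p + (x0 - p) * c + (v0 / omega) * s
        v = (x0 - p) * omega * s + v0 * c
        out.append((x, v))
    return out
```

The `horizon` argument is the knob that adaptive horizon scaling would tune: a longer rollout looks further ahead at a higher compute cost.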
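Circular buffers are the simplest of the memory techniques above to illustrate. This sketch sizes its storage once and overwrites the oldest entry when full, so its footprint never grows inside a control loop. In Python this is only illustrative, since float objects are still allocated; in C or C++ the same index arithmetic over a fixed array avoids heap allocation entirely. The class name `RingBuffer` is hypothetical.

```python
from typing import List


class RingBuffer:
    """Fixed-capacity circular buffer of floats; the backing list never grows."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._data: List[float] = [0.0] * capacity  # sized once, up front
        self._head = 0  # index where the next sample will be written
        self._size = 0  # number of valid samples (<= capacity)

    def push(self, value: float) -> None:
        """Store a sample, overwriting the oldest one when the buffer is full."""
        self._data[self._head] = value
        self._head = (self._head + 1) % len(self._data)
        self._size = min(self._size + 1, len(self._data))

    def __len__(self) -> int:
        return self._size

    def oldest_to_newest(self) -> List[float]:
        """Copy out the valid samples in arrival order (for logging or filtering)."""
        cap = len(self._data)
        start = (self._head - self._size) % cap
        return [self._data[(start + i) % cap] for i in range(self._size)]
```

A typical use is keeping the last few hundred IMU samples for a filter without ever resizing a container.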
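The search part of terrain-aware footstep planning can be sketched as A* over a grid of candidate footholds. In this minimal version, which is an assumption rather than our actual planner, each cell holds either None (not steppable) or a non-negative risk penalty, a step may land on any cell within two cells, and a step costs its length plus the landing cell's risk. Because every step costs at least its straight-line length, Euclidean distance to the goal never overestimates, so it is an admissible heuristic. `plan_footsteps` and `STEP_OFFSETS` are hypothetical names.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical step set: any foothold within two cells of the current one.
STEP_OFFSETS = [(dr, dc) for dr in range(-2, 3) for dc in range(-2, 3) if (dr, dc) != (0, 0)]


def plan_footsteps(risk: List[List[Optional[float]]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* over candidate footholds; returns the cheapest foothold sequence or None."""
    rows, cols = len(risk), len(risk[0])

    def h(cell: Cell) -> float:
        # Straight-line distance: a lower bound on the remaining cost.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    g: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    frontier = [(h(start), start)]
    closed = set()
    while frontier:
        _, cell = heapq.heappop(frontier)
        if cell == goal:  # reconstruct the sequence back to the start
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cell in closed:
            continue
        closed.add(cell)
        for dr, dc in STEP_OFFSETS:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            cost = risk[nxt[0]][nxt[1]]
            if cost is None:  # infeasible foothold, e.g. a hole
                continue
            new_g = g[cell] + math.hypot(dr, dc) + cost
            if new_g < g.get(nxt, math.inf):
                g[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(frontier, (new_g + h(nxt), nxt))
    return None
```

Local optimization of the chosen footholds against live sensor data would then refine this coarse sequence.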

Machine Learning Integration for Adaptability

While model-based control is primary, we strategically integrate machine learning to enhance performance and adaptability:

- Lightweight Neural Network Architectures: We use architectures like MobileNet-inspired depthwise separable convolutions for terrain classification, recurrent models (LSTMs) for state estimation, and knowledge distillation to compress large models for deployment.

- Online Learning and Adaptation: Our system adapts to new environments through online tuning of control parameters, continuous learning of surface properties for gait optimization, and real-time failure mode detection and mitigation.
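Depthwise separable convolutions, popularized by MobileNet, split a standard convolution into a per-channel spatial filter followed by a 1x1 pointwise mix. The arithmetic below (bias terms ignored, function names and channel counts chosen purely for illustration) shows why this matters on small compute.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # spatial filtering, channels kept separate
    pointwise = c_in * c_out  # 1 x 1 conv mixes channels
    return depthwise + pointwise
```

For a 3x3 layer mapping 32 to 64 channels, that is 2,336 weights instead of 18,432, roughly an eightfold reduction. In general the ratio is 1/c_out + 1/k², and the multiply-accumulate count per output pixel shrinks by the same factor.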

Validated Performance on Resource-Constrained Hardware

Our optimized algorithms have demonstrated significant improvements. Beyond successfully running the PEC cycle at 500 Hz on a Raspberry Pi 4B, our validation on various hardware platforms shows:

- ARM Cortex-A78: A 40% reduction in CPU usage compared to baseline implementations.

- NVIDIA Jetson Orin: A 60% improvement in real-time factor using GPU acceleration.

- Custom FPGA: Achieved sub-millisecond control loop execution for critical functions.

Our evaluation considers computation time, energy efficiency, stability, and adaptability together, reflecting our commitment to balancing computational complexity against control performance.

The Future is Efficient and Open

The development of efficient locomotion algorithms for low-power compute platforms is a critical advancement in robotics. By carefully crafting our software architecture and optimizing algorithms, we enable sophisticated robotic behaviors on hardware that is typically resource-constrained, without sacrificing energy efficiency or operational duration. We are excited by the continuous evolution in this field and are even considering releasing our software as open source soon, contributing to the broader robotics community.

We believe the future of robotics lies not just in more powerful hardware but in smarter algorithms that extract maximum performance from the available computational resources while maintaining the reliability and safety essential for real-world deployment.